GELU Kernel Benchmarks: GELU (erf/tanh) on RISC-V RVV

Comparison of libm scalar vs. SLEEF vector GELU implementations (erf exact vs tanh approximation). Results captured on 17 Oct 2025. Implementation file: gelu_kernel.c (https://github.com/docularxu/sleef/blob/working.sleef.bench/gelu/gelu_kernel.c).

Highest Speedup (Double Precision)

1.92x (erf, RVVM2)

Highest Speedup (Single Precision)

2.66x (erff, RVVM2)

Benchmark Environment

Fedora 42 Remix

Released by the Fedora-V Force team.

Download link: images.fedoravforce.org

K1 SoC (RISC-V)

Manufactured by SpacemiT.

CPU: 8 cores, model Spacemit® X60.

MMU Mode: sv39.

GELU Kernel using SLEEF

This implementation provides high-performance vectorized GELU (Gaussian Error Linear Unit) activation functions using SLEEF's math library across multiple SIMD architectures.

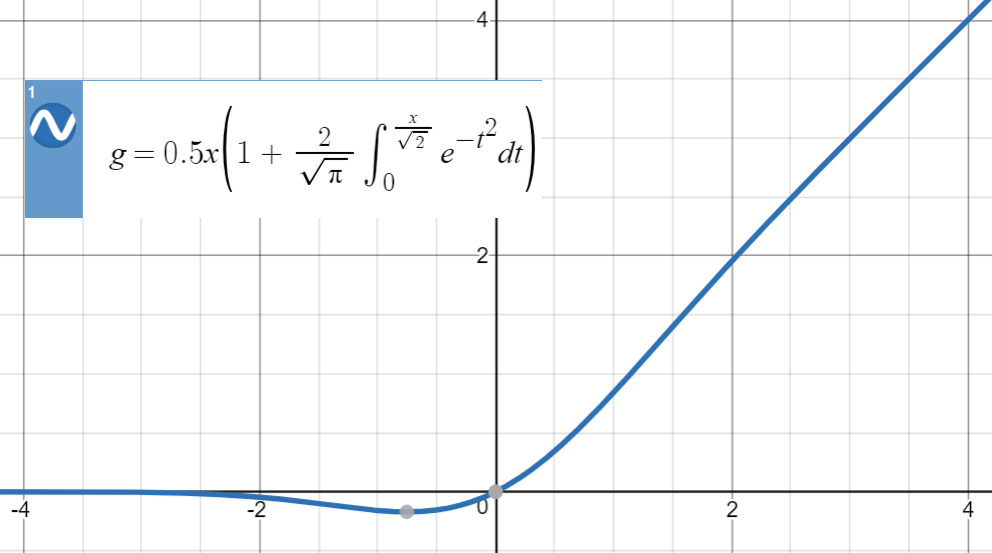

What is GELU?

GELU is an activation function commonly used in neural networks, especially in Transformer models like BERT and GPT. It has two formulations:

- Exact version:

GELU(x) = 0.5 * x * (1 + erf(x/√2))

- Tanh approximation:

GELU(x) ≈ 0.5 * x * (1 + tanh(√(2/π) * (x + 0.044715 * x^3)))

Benchmark Harness

GELU kernel benchmarks were built and run from the gelu/ directory.

Source code: GitHub repository git@github.com:docularxu/sleef.git (fork/clone of shibatch/sleef), branch working.sleef.bench.

Browse: docularxu/sleef@working.sleef.bench

$ cd gelu && ./build_riscv.sh

Benchmark testing:

$ ./gelu_rvvm1 11008 100000; ./gelu_rvvm2 11008 100000;

Why size n=11008?

In LLM inference, GELU is applied over the FFN intermediate size (expanded hidden width per token after the first MLP projection), not small toy sizes. Using n=11008 mimics LLaMA 7B's FFN width, making the microbenchmark more representative.

- Common FFN (intermediate) sizes to mimic:

- BERT-base: 3072

- BERT-large: 4096

- GPT-2 small: 3072

- LLaMA 7B: 11008

- Mistral 7B / LLaMA 3 8B: 14336

- LLaMA 13B: 13824

- LLaMA 70B: 28672

- GPT-3 175B: 49152

- Practical picks for microbenchmarks: 11008 or 14336. For a small set: 3072, 4096, 11008, 14336, 28672, 49152.

Results Table

| Mode | GELU Variant | Precision | libm Time (ns) | Vector Time (ns) | libm Throughput (GB/s) | Vector Throughput (GB/s) | Speedup |

|---|---|---|---|---|---|---|---|

| RVVM1 | erf | FP64 | 117.492 | 83.387 | 0.136 | 0.192 | 1.41x |

| RVVM1 | tanh | FP64 | 155.479 | 97.503 | 0.103 | 0.164 | 1.59x |

| RVVM1 | erff | FP32 | 64.321 | 32.141 | 0.124 | 0.249 | 2.00x |

| RVVM1 | tanhf | FP32 | 72.396 | 39.766 | 0.111 | 0.201 | 1.82x |

| RVVM2 | erf | FP64 | 119.103 | 61.969 | 0.134 | 0.258 | 1.92x |

| RVVM2 | tanh | FP64 | 156.496 | 98.139 | 0.102 | 0.163 | 1.59x |

| RVVM2 | erff | FP32 | 64.825 | 24.326 | 0.123 | 0.329 | 2.66x |

| RVVM2 | tanhf | FP32 | 72.987 | 39.688 | 0.110 | 0.202 | 1.84x |

Note: 'erf' indicates the exact GELU formulation using the error function; 'tanh' indicates the tanh approximation. The 'f' suffix denotes single precision (FP32).

Charts: Time, Throughput, and Speedup

Double Precision: Time (ns/element) by GELU Variant

Lower is better. GELU Variants: erf (exact), tanh (approx).

Single Precision: Time (ns/element) by GELU Variant

Lower is better. GELU Variants: erff (exact FP32), tanhf (approx FP32).

Double Precision: Throughput (GB/s) by GELU Variant

Higher is better.

Single Precision: Throughput (GB/s) by GELU Variant

Higher is better.

Speedup (Vector vs. libm Scalar)

Speedup = libm time / SLEEF Vector time.

Observations

What the benchmark shows

- Setup: Results aggregated from two configurations (RVVM1: LMUL=1, RVVM2: LMUL=2). Numbers are average per-element timings.

- GELU variants: erf = exact GELU; tanh = tanh-approximation GELU. The suffix f denotes FP32.

- Columns:

- Time (ns): Average nanoseconds per element (includes one load and one store).

- Throughput (GB/s): Total bytes moved per second. For unary ops:

- FP64: 16 bytes/element (8B load + 8B store) → 16 / Time.

- FP32: 8 bytes/element (4B load + 4B store) → 8 / Time.

- Example: FP64 RVVM1 erf 83.387 ns → 16 / 83.387 ≈ 0.192 GB/s; FP64 RVVM2 erf 61.969 ns → 16 / 61.969 ≈ 0.258 GB/s.

- Speedup: Relative to the scalar libm baseline of the same GELU variant/precision.
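The derived columns follow from the per-element time alone. The sketch below restates the two formulas as C helpers; the function names are hypothetical and are not taken from the benchmark source.

```c
#include <assert.h>

/* Throughput in GB/s for a unary op: bytes moved per element divided by
   nanoseconds per element (1 byte/ns equals 1e9 bytes/s, i.e. 1 GB/s). */
double throughput_gbps(double bytes_per_element, double ns_per_element) {
    assert(ns_per_element > 0.0);
    return bytes_per_element / ns_per_element;
}

/* Speedup of the vector kernel over the scalar libm baseline. */
double speedup(double libm_ns, double vector_ns) {
    assert(vector_ns > 0.0);
    return libm_ns / vector_ns;
}
```

With the RVVM2 FP64 erf row of the results table, `throughput_gbps(16.0, 61.969)` evaluates to about 0.258 and `speedup(119.103, 61.969)` to about 1.92, matching the table.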

Key takeaways

- Vector SLEEF GELU kernels are faster than scalar libm across the board (RVVM2 shown):

- FP64: 1.92x (erf), 1.59x (tanh)

- FP32: 2.66x (erff), 1.84x (tanhf)

- FP32 benefits more from vectorization: more lanes per vector and generally cheaper approximations.

- RVVM2 vs RVVM1 (vector time ratio): RVVM2 helps most on erf/erff (~1.35x FP64 erf; ~1.32x FP32 erff). tanh/tanhf are essentially flat (~0.99–1.00x).

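- tanh-based vs erf-based GELU: The scalar tanh-based baseline is slower than the erf-based one (about 155–156 ns vs. 117–119 ns per element in FP64). Vectorization speeds up the tanh variant as well (1.59x FP64, 1.82–1.84x FP32), but the vector erf kernels remain faster than the vector tanh kernels in every configuration measured.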

- Throughput note: Absolute GB/s is low compared with system memory bandwidth, so the kernels are compute-bound. The GB/s figure here is a normalized form of the per-element time, not a sign of a memory limit.

- Bottom line: SLEEF RVV delivers solid gains, with RVVM2 reaching up to ~2.66x (erff) over libm.

Raw Benchmark Outputs (Two Configurations)

RVVM1 (LMUL=1)

    $ ./gelu_rvvm1 11008 100000; ./gelu_rvvm2 11008 100000;
    ========================================
    GELU Kernel using SLEEF (RISC-V Vector (LMUL=1))
    ========================================
    Double precision correctness test:
    GELU exact max error: 2.557e-16
    GELU tanh approx max error: 2.479e-16
    Max difference (exact vs tanh): 4.732e-04
    Single precision correctness test:
    GELU exact max error: 1.923e-07
    GELU tanh approx max error: 2.594e-07
    Max difference (exact vs tanh): 4.733e-04
    Benchmark (n=11008, iterations=100000):
    ========================================
    Double Precision (FP64):
    Implementation                    Time (ns)   Throughput    Speedup
    ------------------------------ ------------ ------------ ----------
    Scalar libm (erf)                   117.492   0.136 GB/s      1.00x
    SLEEF Vector (erf)                   83.387   0.192 GB/s      1.41x
    Scalar libm (tanh)                  155.479   0.103 GB/s      1.00x
    SLEEF Vector (tanh)                  97.503   0.164 GB/s      1.59x
    ========================================
    Single Precision (FP32):
    Implementation                    Time (ns)   Throughput    Speedup
    ------------------------------ ------------ ------------ ----------
    Scalar libm (erff)                   64.321   0.124 GB/s      1.00x
    SLEEF Vector (erff)                  32.141   0.249 GB/s      2.00x
    Scalar libm (tanhf)                  72.396   0.111 GB/s      1.00x
    SLEEF Vector (tanhf)                 39.766   0.201 GB/s      1.82x
    ========================================

RVVM2 (LMUL=2)

    $ ./gelu_rvvm2 11008 100000
    ========================================
    GELU Kernel using SLEEF (RISC-V Vector (LMUL=2))
    ========================================
    Double precision correctness test:
    GELU exact max error: 2.557e-16
    GELU tanh approx max error: 2.479e-16
    Max difference (exact vs tanh): 4.732e-04
    Single precision correctness test:
    GELU exact max error: 1.923e-07
    GELU tanh approx max error: 2.594e-07
    Max difference (exact vs tanh): 4.733e-04
    Benchmark (n=11008, iterations=100000):
    ========================================
    Double Precision (FP64):
    Implementation                    Time (ns)   Throughput    Speedup
    ------------------------------ ------------ ------------ ----------
    Scalar libm (erf)                   119.103   0.134 GB/s      1.00x
    SLEEF Vector (erf)                   61.969   0.258 GB/s      1.92x
    Scalar libm (tanh)                  156.496   0.102 GB/s      1.00x
    SLEEF Vector (tanh)                  98.139   0.163 GB/s      1.59x
    ========================================
    Single Precision (FP32):
    Implementation                    Time (ns)   Throughput    Speedup
    ------------------------------ ------------ ------------ ----------
    Scalar libm (erff)                   64.825   0.123 GB/s      1.00x
    SLEEF Vector (erff)                  24.326   0.329 GB/s      2.66x
    Scalar libm (tanhf)                  72.987   0.110 GB/s      1.00x
    SLEEF Vector (tanhf)                 39.688   0.202 GB/s      1.84x
    ========================================